A Hitch-Hacker’s Guide to the Galaxy – Developing a Cyber Security Roadmap for Executive Leaders

In this blog series, I am looking at steps that your organisation can take to build a roadmap for navigating the complex world of cyber security and improving your cyber security posture.

There’s plenty of technical advice out there for helping security and IT teams who are responsible for delivering this for their organisations. Where this advice is lacking is for executive leaders who may or may not have technical backgrounds but are responsible for managing the risk to their organisations and have to make key decisions to ensure they are protected.

This blog series aims to meet that need, and provide you with some tools to create a roadmap for your organisation to follow to deliver cyber security assurance.

Each post focuses on one aspect to consider in your planning, and each forms a part of the Cyber Security Assessment service which we offer to our member organisations in the UK Higher and Further Education sector, as well as customers within Local Government, Multi-Academy Trusts, Independent Schools and public and private Research and Innovation. To find out more about this service, please contact your Relationship Manager, or contact us directly using the link above.

Episode 7: Known unknowns

“All through my life I’ve had this strange unaccountable feeling that something was going on in the world, something big, even sinister, and no one would tell me what it was.”

“No,” said the old man, “that’s just perfectly normal paranoia. Everyone in the Universe has that.”

Douglas Adams, The Hitchhiker’s Guide to the Galaxy

[ Reading time: 11 minutes ]

Back in Episode 2 (Know thyself) I recalled the then US Secretary of Defense Donald Rumsfeld’s now-famous quote about “known unknowns” and “unknown unknowns”.

The essence of it is that there are risks you are aware of—known unknowns—and risks that come from situations that are so unexpected that they are not in the scope of consideration—unknown unknowns.

One of the challenges of managing cyber risk is that it’s intangible, and hard to know what normal looks like, let alone good.

To be able to properly understand and manage the risk, we need to find ways of usefully measuring it.

There are a number of metrics for cyber security which are easy to record and report on. For example, the number of suspicious emails blocked by your mail filter.

While that metric tells you that your mail filter is working, it doesn’t actually tell you how well you’re protected. If it blocked more emails this month than last, does that mean you’re better protected, or just that your organisation received more suspicious emails?

For sure, you need to have a mail filter as part of your cyber protections, but if you spent twice as much on it, would you get twice the protection level?

Linking investment to protection

Executives holding the purse strings want to know how effective their spend on security measures is going to be so that they can prioritise budgets.

“Outcome-driven metrics” are those which directly link investment to protection. I’ll illustrate this with 2 examples: patching and phishing.

Patching (which I talked about in Episode 6, A stitch in space and time) is a crucial component in your cyber security strategy. The longer you leave systems unpatched, the longer you are exposed to vulnerabilities and the higher the risk of compromise. The number of unpatched systems in your organisation is one possible metric you can measure and report on. Scanning tools like Nessus and Qualys provide this information, and we provide this information to you in a Cyber Security Assessment.

However, the number of unpatched systems by itself isn’t a very useful metric, because it doesn’t tell you whether systems were unpatched for 1 day or 9 months, so it’s not a meaningful measure of the risk. It’s a “backwards looking” metric which doesn’t predict how well protected you’re going to be going forward.

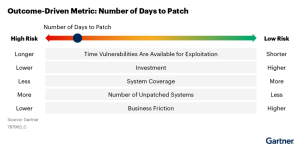

What you want to establish is a measure which can be used as a basis for discussion about risk and investment levels. That “forwards looking” metric is “number of days to patch”.

You reduce the risk by reducing the patching windows, increasing the coverage of systems being patched, and reducing the number of unpatched systems. This is nicely illustrated in the accompanying infographic from Gartner.

It is up to the executive team to determine what level of risk the organisation is happy to accept from having unpatched systems. Is it 1 week? 1 month? 3 months? Or more? Do critical line of business systems have different patching targets?
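To make “number of days to patch” concrete, here is a minimal sketch of how an implementation team might report it against a target set at policy level. The system names, dates and the 30-day target are all hypothetical, and the record format is an assumption rather than the output of any particular scanning tool.

```python
from datetime import date
from statistics import median

# Hypothetical records: (system, date patch released, date applied or None if still open)
PATCH_RECORDS = [
    ("web-01", date(2024, 3, 1), date(2024, 3, 5)),
    ("db-01", date(2024, 3, 1), date(2024, 3, 29)),
    ("hr-app", date(2024, 3, 1), date(2024, 4, 20)),
    ("lab-pc", date(2024, 3, 1), None),
]


def days_to_patch_summary(records, target_days, as_of):
    """Summarise patching speed against a policy target expressed in days."""
    # Patches that are still open are aged up to the reporting date,
    # so long-unpatched systems are not silently left out of the figures.
    ages = [((applied or as_of) - released).days for _, released, applied in records]
    on_time = sum(
        1
        for _, released, applied in records
        if applied is not None and (applied - released).days <= target_days
    )
    return {
        "median_days": median(ages),
        "worst_days": max(ages),
        "percent_within_target": round(100 * on_time / len(records), 1),
        "still_unpatched": sum(1 for _, _, applied in records if applied is None),
    }


# Assumed policy target: patch within 30 days of release.
summary = days_to_patch_summary(PATCH_RECORDS, target_days=30, as_of=date(2024, 5, 1))
print(summary)
```

Reporting the worst case and the share patched within target alongside the median keeps a handful of long-neglected systems from hiding behind a healthy-looking average. The sketch assumes at least one record; a real report would need to handle an empty list.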

What we often find in our Cyber Security Assessments is that patching is done on more or less a “best endeavours” basis. Notice the bottom line in the infographic. Better patching means higher business friction. And (excuse the pun), there’s the rub. I said a lot about this in Episode 6 (A stitch in space and time), the essence being that executive decisions on risk reduction should be the key driver which supports this.

We know that patching is a strong protective measure for reducing cyber risk and so executive teams should be determining patching windows at policy level based on a defined risk appetite. Once established, you can have a discussion with your implementation teams about delivering those patching targets. Do they need systems which can automate more of the process? Do they need more headcount? Decision-making on investment in resourcing will have a direct impact on your ability to meet patching levels and therefore manage the risk.

Teach someone to catch a phish…

We know that in our sector, 90% of compromises leading to a cyber incident begin with phishing emails which are designed to harvest user credentials (usernames and passwords).

However good your anti-phishing email protections are, some are going to make it through. So you need a layered defence, and your people are your greatest asset when it comes to spotting phishing emails.

But it doesn’t just happen by magic. You need to train staff, and do it regularly. That means running phishing training campaigns to test their awareness and security consciousness. You want people to be reporting suspicious emails and not clicking on the bait. The reporting rate and the number of “click-throughs” on phishing emails are good metrics to report on, and they link directly to investment.

Again, Gartner has a nice infographic illustrating the impacts on risk. Increase your investment in phishing training, and the number of click-throughs should reduce, resulting in a lower number of phishing-related incidents, and a consequent reduction in remediation costs.
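As a rough illustration of tracking these two measures over time, the sketch below turns the raw counts from simulated phishing campaigns into click-through and reporting rates. The campaign names and every figure are made up for the example, and the data layout is an assumption rather than the export format of any specific training platform.

```python
# Hypothetical results from two simulated phishing campaigns (figures are invented).
CAMPAIGNS = [
    {"name": "Autumn", "sent": 400, "clicked": 60, "reported": 80},
    {"name": "Spring", "sent": 500, "clicked": 40, "reported": 175},
]


def campaign_rates(campaign):
    """Return click-through and reporting rates as percentages of emails sent."""
    sent = campaign["sent"]
    return {
        "name": campaign["name"],
        "click_rate": round(100 * campaign["clicked"] / sent, 1),
        "report_rate": round(100 * campaign["reported"] / sent, 1),
    }


rates = [campaign_rates(c) for c in CAMPAIGNS]
for r in rates:
    print(f'{r["name"]}: {r["click_rate"]}% clicked, {r["report_rate"]}% reported')
```

Using rates rather than raw counts matters because campaigns rarely go to the same number of people, so counts alone cannot be compared from one campaign to the next.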

Bear in mind that in the education sector, the recovery time from a ransomware incident is anywhere from 10 to 24 days, with full recovery from serious incidents running into several months. The average cost is over £2m per incident.

Those numbers put into perspective the investment in training.

Seeing the bigger picture

What other metrics are useful to review?

Microsoft provides a SecureScore as a percentage value for your MS365 cloud tenancy. It’s a useful starting point, but it’s imperfect because it reflects security measures which Microsoft thinks you should be applying. These may or may not be appropriate to your organisation, and therefore the SecureScore value needs to be treated with some caution. But it’s useful to keep an eye on, especially as cloud security measures evolve over time and best practice recommendations are revised.

Other useful metrics include: numbers of critical and high risk vulnerabilities; numbers of inactive user accounts; percentage of users with multi-factor authentication enabled.
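Two of these are simple to derive from a list of user accounts. The sketch below is one possible way to do it, assuming a hypothetical export with an MFA flag and a last sign-in date per user; the account names, the field names and the 90-day inactivity threshold are all illustrative assumptions.

```python
from datetime import date

# Hypothetical account export; field names are assumptions for this example.
ACCOUNTS = [
    {"user": "a.dent", "mfa": True, "last_sign_in": date(2024, 4, 28)},
    {"user": "f.prefect", "mfa": True, "last_sign_in": date(2023, 11, 2)},
    {"user": "z.beeblebrox", "mfa": False, "last_sign_in": date(2024, 4, 30)},
    {"user": "t.mcmillan", "mfa": True, "last_sign_in": None},  # never signed in
]


def account_metrics(accounts, as_of, inactive_after_days=90):
    """Return MFA coverage (percent) and the number of inactive accounts."""

    def is_inactive(account):
        last = account["last_sign_in"]
        # Accounts that have never been used are counted as inactive too.
        return last is None or (as_of - last).days > inactive_after_days

    mfa_enabled = sum(1 for a in accounts if a["mfa"])
    return {
        "mfa_percent": round(100 * mfa_enabled / len(accounts), 1),
        "inactive_accounts": sum(1 for a in accounts if is_inactive(a)),
    }


metrics = account_metrics(ACCOUNTS, as_of=date(2024, 5, 1))
print(metrics)
```

What counts as “inactive” is itself a policy decision, so the threshold is best agreed alongside the other targets rather than left as a default.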

Dashboards are the ideal way to present the numbers, especially over time. Microsoft PowerBI is a great tool for doing this and can make interactive dashboards available to anyone who needs access, with the capability to drill down into the data to get greater granularity.

Don’t look away

How often should you be reviewing metrics? The answer depends on who’s looking.

I’d expect operational teams to be keeping an eye on things on a daily basis, with at least a weekly deep dive to identify any challenges.

For wider oversight, I’d expect an information security review group to be reviewing these monthly, and an executive or audit committee to be reviewing quarterly, as a minimum.

A Final [Deep] Thought

In the next episode of A Hitch-Hacker’s Guide to the Galaxy (It’s a Zero Trust Game), we’ll be looking at network security challenges: how to stop attackers getting in, and how to minimise their room for manoeuvre if they do.

For now, you can take useful steps forward by asking your information security team to report some baseline metrics so that you can start to understand what normal looks like.

James Bisset is a Cyber Security Specialist at Jisc. He has over 25 years’ experience working in IT leadership and management in the UK education sector. He is a Certified Information Systems Security Professional, Certified Cloud Security Professional, and is a member of the GIAC Advisory Board.