A Hitch-Hacker’s Guide to the Galaxy – Developing a Cyber Security Roadmap for Executive Leaders

In this blog series, I am looking at steps that your organisation can take to build a roadmap for navigating the complex world of cyber security and improving your cyber security posture.

There’s plenty of technical advice out there for helping security and IT teams who are responsible for delivering this for their organisations. Where this advice is lacking is for executive leaders who may or may not have technical backgrounds but are responsible for managing the risk to their organisations and have to make key decisions to ensure they are protected.

This blog series aims to meet that need, and provide you with some tools to create a roadmap for your organisation to follow to deliver cyber security assurance.

Each post focuses on one aspect to consider in your planning, and each forms a part of the Cyber Security Assessment service which we offer to our member organisations in the UK Higher and Further Education sector, as well as customers within Local Government, Multi-Academy Trusts, Independent Schools and public and private Research and Innovation. To find out more about this service, please contact your Relationship Manager, or contact us directly using the link above.

Episode 6: A stitch in space and time

“A common mistake that people make when trying to design something completely foolproof is to underestimate the ingenuity of complete fools.” Douglas Adams, Mostly Harmless (The Hitchhiker’s Guide to the Galaxy series)

[ Reading time: 11 minutes ]

A fool’s paradise?

Computers aren’t foolproof. We frequently attempt to use them in ways that designers never envisaged, and they fail regularly. Thankfully, we no longer have to endure the “blue screen of death” which was a regular feature of early Windows systems, but look at the event logs of any computer, tablet or smartphone, and you’ll see numerous entries indicating where things have gone wrong.

The trouble is that although we’re not aware of our computers failing as often or as catastrophically as we used to in the old “blue screen” days, failure is a fact of life. We shouldn’t really be surprised by this. Computers are the most sophisticated machines we have ever created, with many sub-systems that were often designed with purposes in mind which were different from how they are used in practice. Link them together into networks, and the failure possibilities multiply exponentially.

Unfortunately, when they do fail, computers don’t always fail safely. And that’s where the lack of fool-proofing becomes a problem, because those unsafe states of failure can be discovered and exploited by malicious actors, which is why cyber security is such an important and challenging area of risk to manage.

On top down under

In 2017, the Australian Cyber Security Centre published its Essential Eight guidance on cyber defence, in which it claims that implementing these eight measures mitigates 85% of cyber risks. The first two measures are “patching applications” and “patching operating systems”. Patching just means keeping the software up to date.

It’s not glamorous, and requires no shiny new kit, but makes a huge difference to your security posture.

It sounds obvious, but it means you need to know what software you have, so that you know what you need to update. I talked about that in Episode 2 (Know thyself).

Software needs to be updated because security flaws are being discovered all the time, and software vendors provide update “patches” to remedy those flaws. Fail to apply the patch and you leave yourself vulnerable.

Microsoft and Adobe release monthly updates on the second Tuesday of each month (so-called “Patch Tuesday”), Oracle publishes its Critical Patch Updates quarterly, and many systems and applications have options to auto-update. Many laptops, phones and tablets are set to automatically download and install updates, which often contain important security fixes.
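If your teams want to plan maintenance windows around Patch Tuesday, the date is easy to work out in advance. The following is a minimal Python sketch, not a scheduling tool; the function name `patch_tuesday` is my own and is only for illustration.

```python
import calendar
from datetime import date


def patch_tuesday(year: int, month: int) -> date:
    """Return the second Tuesday of the given month."""
    first_weekday = date(year, month, 1).weekday()  # Monday is 0, Tuesday is 1
    # Number of days from the 1st of the month to its first Tuesday (0 to 6).
    offset = (calendar.TUESDAY - first_weekday) % 7
    # The second Tuesday falls one week after the first.
    return date(year, month, 1 + offset + 7)


if __name__ == "__main__":
    # List the Patch Tuesday dates for the first quarter of 2025.
    for month in range(1, 4):
        print(patch_tuesday(2025, month).isoformat())
```

A calendar like this only tells you when updates are likely to arrive. You still have to decide how quickly after that date each system gets patched.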

Why patching doesn’t happen

Knowing what software you have to patch is one thing. Actually doing the patching is another, especially for large organisations, where it can be more difficult to manage, because there are lots of moving parts. An update that fixes a problem in one area can sometimes cause one somewhere else.

You don’t want updates to apply automatically overnight, only to find that things have stopped working the following day. If a patch goes wrong, then systems need to be rolled back to the way they were. That in itself takes time, and it can take yet more to work out why it didn’t work and what to do about it.

And all the while, you remain vulnerable to the problem the patch was designed to fix in the first place.

It’s a particular challenge for your core line of business applications, like finance, HR, student records or email, because lots of people rely on these. You may have limited tolerance for taking these key systems offline to be updated, and may have to schedule updates for time windows that avoid the potential for disruption.

In bigger organisations, patching usually happens out of hours, to avoid disrupting normal business. That means evenings and weekends, and sometimes overnight. Your patching teams need to eat and sleep too, though, so you have to make sure there’s enough resource in the team to allow for that. For smaller organisations, that’s a challenge, which often means that patching is delayed until holiday periods, leaving you vulnerable for weeks or months.

Any decision to defer patching means you are accepting the risk that is remediated by the patch, but it’s not usually viewed in those terms. Normally, people are more focussed on keeping systems running and maintaining business as usual.

Applications used in research and development may run on legacy systems that perform a valuable research function but are no longer supported by the vendor, so no patches are available. Where you can’t patch, you need to segregate, and I’ll say more about that in Episode 8 (It’s a zero trust game).

Time to comply

Good patching isn’t just a security best practice. You need to be doing it, and be able to prove that you are doing it, for Cyber Essentials and CE Plus. Compliance for these requires you to install critical security patches within 14 days of release, or to segregate the systems you can’t patch.
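To make the 14-day window concrete, here is a minimal Python sketch of how a team might classify each patch against it. The `PatchRecord` structure, the status labels and the sample data are all assumptions of mine for illustration. They are not part of the Cyber Essentials scheme or of any particular patching tool.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

PATCH_WINDOW = timedelta(days=14)  # the window quoted in the text


@dataclass
class PatchRecord:
    """Hypothetical record of one patch on one system."""
    system: str
    patch_id: str
    released: date
    installed: Optional[date] = None  # None means not yet installed


def patch_status(record: PatchRecord, today: date) -> str:
    """Classify a patch against the 14-day window."""
    deadline = record.released + PATCH_WINDOW
    if record.installed is not None:
        return "on time" if record.installed <= deadline else "late"
    return "pending" if today <= deadline else "overdue"


if __name__ == "__main__":
    today = date(2025, 3, 1)
    records = [
        PatchRecord("finance", "patch-A", date(2025, 2, 1), date(2025, 2, 10)),
        PatchRecord("student-records", "patch-B", date(2025, 2, 1)),
        PatchRecord("email", "patch-C", date(2025, 2, 20)),
    ]
    for record in records:
        print(record.system, record.patch_id, patch_status(record, today))
```

Anything reported as "overdue" is a risk that someone has accepted, whether or not they realised it at the time.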

That’s a strong protective measure, but even if you have a patching policy that mandates this, it’s hard to achieve in reality.

There are tools available to automate and monitor patching status, but you still need someone to configure and manage these systems, and you’ll never achieve patching compliance without manual intervention.

So your patching policy has to be backed up with support for the teams that will be doing the work. Make sure that you have processes in place to review what systems need updating, and that you have plans in place for doing this with critical business systems.

What you mustn’t do is ignore the problem or kick it down the road. Leaving patching until the summer holiday, when disruption is minimal, isn’t a strategy, and won’t look smart if you have to deal with an incident resulting from an unpatched vulnerability.

Assign responsibility and accountability

Patching is critical to your security and responsibility for delivering it shouldn’t be left to your IT teams alone. They will be responsible for your core systems—like servers, switches and firewalls—but for others you should assign responsibility for patching to the system owners. Each system needs a business and technical owner: the business owner is accountable for the security of their system, including patching, and the technical owner (which is often the IT team) works with them to deliver this.

The system owner is the one who makes the risk decision about when to patch. This decision should be in line with the organisational risk appetite, and documented. Patching schedules and expected downtime have to be agreed between system and technical owners, possibly by means of a protection level agreement. After all, the patching teams have to have the time and people available to do the work. If your teams can’t meet the patching levels required, you need to review whether your expectations are too high or if you need to provide more resource.

Watch the numbers

Unpatched systems are an important cyber risk indicator, and as executive leaders, you should make patching compliance a key target. Make sure you are reviewing this regularly and setting targets for your teams to achieve.

How do you get those numbers? Scanning tools like Nessus and Qualys can be used to identify unpatched systems and other security vulnerabilities on your network. These can be run independently of the teams doing the patching so you can be assured of the validity of the results. When we undertake a Cyber Security Assessment, we’ll scan your network internally and externally, report on vulnerabilities prioritised by criticality, and provide remediation advice, to support your teams in mitigating the risks.
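However the scan data reaches you, the number you want on your dashboard is simple: what proportion of each owner’s systems have no overdue patches? The Python sketch below shows one way to calculate it. The input format (system name, business owner, count of overdue patches) is a simplification I have invented for illustration. It is not the export format of Nessus, Qualys or any other scanner.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# (system name, business owner, number of overdue patches) - hypothetical format
SystemScan = Tuple[str, str, int]


def compliance_by_owner(systems: Iterable[SystemScan]) -> Dict[str, float]:
    """Return the percentage of fully patched systems for each business owner."""
    totals: Dict[str, int] = defaultdict(int)
    compliant: Dict[str, int] = defaultdict(int)
    for _name, owner, overdue in systems:
        totals[owner] += 1
        if overdue == 0:
            compliant[owner] += 1
    return {
        owner: round(100.0 * compliant[owner] / totals[owner], 1)
        for owner in totals
    }


if __name__ == "__main__":
    # Illustrative data only.
    scan = [
        ("ledger", "Finance", 0),
        ("payroll", "Finance", 3),
        ("lab-pc-01", "Research", 0),
        ("lab-pc-02", "Research", 0),
        ("microscope-controller", "Research", 12),
    ]
    for owner, percent in compliance_by_owner(scan).items():
        print(f"{owner}: {percent}% of systems fully patched")
```

Reporting by business owner rather than as a single organisation-wide figure makes it clear where the accountability sits.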

If at first you don’t succeed, scan, scan again

These tools make use of mature threat and vulnerability intelligence services.

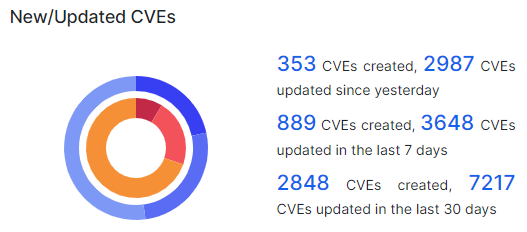

The Common Vulnerabilities and Exposures (CVE) system was launched in 1999, with the Common Vulnerability Scoring System (CVSS) released in 2005. The CVE database catalogues publicly disclosed vulnerabilities, giving each a CVE reference number, and a CVSS score is attached to indicate its severity. The database contains over 240,000 entries, with around 300 new vulnerabilities reported daily and 3,000 updates to existing entries.

Software developers and security vendors have access to the CVE database, so developers can report vulnerabilities and release fixes for them, and anti-malware tools are regularly updated. Scanning tools like Nessus and Qualys also draw on the CVE database, so scan results reflect the latest published vulnerabilities, as long as the tools themselves are kept up to date.

Because the threat intelligence changes constantly, you need to be scanning regularly. The scans are quick and easy to set up and run, and automated reports can be delivered daily. That’s the easy part.

As we’ve seen, the hard part is remediating the findings, and that’s again where you have an important role to play. You need to ensure that information about vulnerabilities gets to the business system owners, and that there is sufficient capacity in the IT team to respond to patching requests.

The delivery teams also need support in prioritising patching work. The CVSS scores help with this. Start with the high priority issues and work down from there. However, not all vulnerabilities are solved just by a software update or configuration change, and sometimes fixing one problem will have an impact somewhere else. For example, updating the firmware on your network switches will cause a loss of network and internet connection while this happens, which even if it’s scheduled for overnight or at the weekend, could cause a problem for some people or systems.
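As a rough illustration of working down from the highest scores, the Python sketch below sorts a list of findings by CVSS score and labels each with a severity band. The bands follow the qualitative rating scale published with CVSS version 3. The `Finding` structure and the identifiers in the sample data are placeholders I have made up; they are not real CVE entries.

```python
from typing import List, NamedTuple


class Finding(NamedTuple):
    """Hypothetical scan finding; identifiers are placeholders."""
    reference: str
    system: str
    cvss: float


def severity(score: float) -> str:
    """Map a CVSS v3 base score to its qualitative severity band."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS scores run from 0.0 to 10.0, got {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


def prioritise(findings: List[Finding]) -> List[Finding]:
    """Return findings ordered from highest CVSS score to lowest."""
    return sorted(findings, key=lambda finding: finding.cvss, reverse=True)


if __name__ == "__main__":
    findings = [
        Finding("EXAMPLE-0001", "web-server", 5.3),
        Finding("EXAMPLE-0002", "student-records", 9.8),
        Finding("EXAMPLE-0003", "core-switch", 7.5),
    ]
    for finding in prioritise(findings):
        print(finding.reference, finding.system, finding.cvss, severity(finding.cvss))
```

The score is only a starting point. A "Medium" finding on a system holding student records may deserve attention before a "High" one on an isolated test machine, which is why the business owner needs to be part of the conversation.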

Make it happen

You have a role in defining the patching policy so that it is clear to all stakeholders that this is an organisational priority. This supports the delivery teams in overcoming resistance when undertaking remediation activities. Provide your teams with tools that automate vulnerability scanning and patching processes as much as possible.

And hold your system owners to account. Patching compliance must be a key performance indicator for the organisation as a whole, and for each application, whether it’s a line of business or research activity.

A Final [Deep] Thought

In the next episode of A Hitch-Hacker’s Guide to the Galaxy, we’ll be looking at how to measure your cyber security risk and readiness. Known Unknowns…

For now, you can take useful steps forward by reviewing your organisation’s Patching Policy. Do you have one? Does it cover all your systems, including ones that aren’t managed by your IT teams, like Building Management and CCTV systems? Do you have the resource capacity to meet the 14-day patching window required for Cyber Essentials compliance? Have you assigned responsibilities to business and technical owners for patching? Do you regularly scan your systems for vulnerabilities? Do you review the findings? What is your risk appetite for running systems with unpatched vulnerabilities?

James Bisset is a Cyber Security Specialist at Jisc. He has over 25 years’ experience working in IT leadership and management in the UK education sector. He is a Certified Information Systems Security Professional, Certified Cloud Security Professional, and is a member of the GIAC Advisory Board.